Many network engineers still manually configure VLANs and network policies across multiple hypervisors, unaware that they are creating a maintenance burden that compounds with every new virtual machine deployment. Yet many organizations continue to rely on traditional networking approaches even as their virtualized infrastructure grows more complex.

In all likelihood, you would also be surprised to discover how much time your network team spends troubleshooting connectivity issues that stem from inconsistent configurations across your virtual infrastructure. These are some of the very basic network virtualization challenges that organizations of all scales tackle when managing modern data centers. However, these initial observations only scratch the surface of what's possible when you embrace software-defined networking through Open vSwitch (OVS) and Open Virtual Network (OVN).

In this article, we discuss key considerations for implementing OVS and OVN to effectively address network virtualization challenges, optimize your network operations, and create a more agile infrastructure.

Summary of Key Considerations for OVS and OVN Implementation

| Key Consideration | Description |
|---|---|
| Assess your current network complexity | Evaluate whether your virtual network requirements justify the learning curve and operational overhead of OVS/OVN |
| Plan for distributed architecture | Design your deployment to leverage OVN's distributed control plane for scalability and fault tolerance |
| Implement gradual migration | Start with OVS for basic switching needs before adding OVN's advanced networking features |
| Monitor performance metrics | Deploy comprehensive monitoring to track flow tables, connection tracking, and overall network performance |
| Automate configuration management | Use declarative configuration approaches to maintain consistency across your virtual network infrastructure |

Understanding the OVS and OVN Relationship

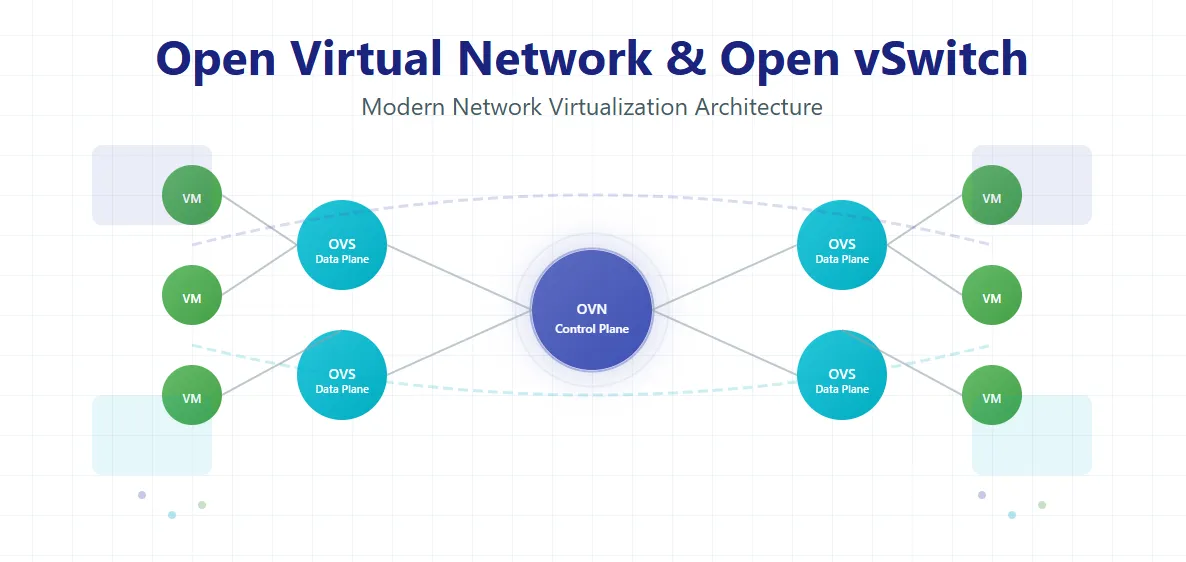

Before diving into implementation details, it helps to understand how OVS and OVN work together in your virtualization stack. Planning the deployment is often straightforward conceptually, but getting all the components to work together requires understanding their distinct roles.

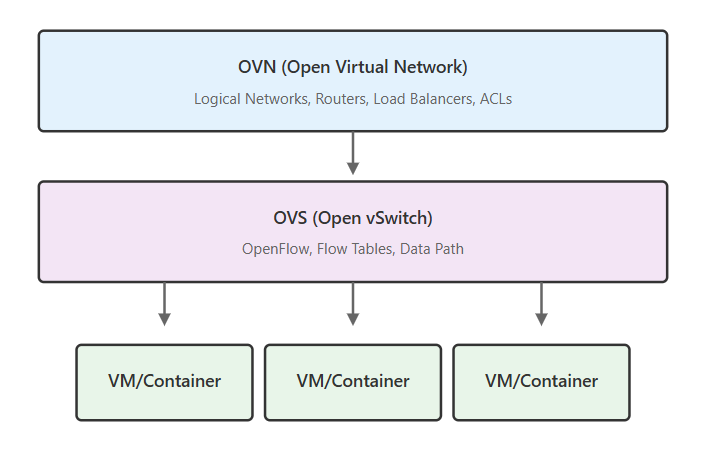

Open vSwitch serves as the foundation: it is the data plane that handles packet forwarding in your virtual environment. Think of it as the engine that moves network traffic between virtual machines, containers, and physical networks. OVS replaces the basic Linux bridge with a feature-rich, programmable switch that understands OpenFlow and can handle complex forwarding decisions.

OVN builds on top of OVS to provide the network virtualization layer. While OVS gives you a powerful switch, OVN gives you an entire virtual network infrastructure. It abstracts away the complexity of configuring individual switches and provides logical networks, routers, load balancers, and security policies that span across your entire infrastructure.

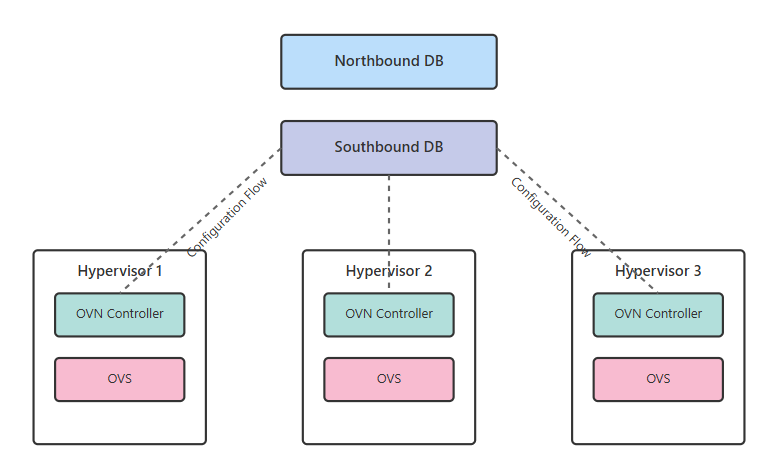

This integration works by having OVN's components manage the OVS instances across your environment. These include the OVN Northbound Database, which stores your desired logical network configuration; the ovn-northd daemon, which translates that configuration into logical flows; the OVN Southbound Database, which holds those logical flows along with the chassis and port bindings that describe the physical state; and the ovn-controller daemon on each hypervisor, which programs the local OVS instance. The more consistently these components are deployed and kept in sync, the better your virtual network can scale and adapt.
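To make the path from desired state to per-hypervisor programming more concrete, here is a minimal Python sketch that assembles the `ovn-nbctl` commands for declaring a logical switch and its ports in the Northbound Database. The helper name `logical_switch_commands`, the switch name `sw0`, the port name, and the addresses are all illustrative assumptions. The sketch only builds and prints the commands; it does not run them.

```python
import shlex


def logical_switch_commands(switch, ports):
    """Build ovn-nbctl argument lists for one logical switch and its ports.

    `ports` maps a logical port name to its "MAC IP" address string.
    (Hypothetical helper for illustration; nothing is executed here.)
    """
    commands = [["ovn-nbctl", "ls-add", switch]]
    for port, addresses in ports.items():
        # Create the logical port, then attach its addresses.
        commands.append(["ovn-nbctl", "lsp-add", switch, port])
        commands.append(["ovn-nbctl", "lsp-set-addresses", port, addresses])
    return commands


if __name__ == "__main__":
    # Example values are assumptions, not taken from a real deployment.
    plan = logical_switch_commands(
        "sw0", {"sw0-vm1": "00:00:00:00:00:01 10.0.0.11"}
    )
    for argv in plan:
        print(shlex.join(argv))
```

Nothing in these commands refers to a specific hypervisor: the configuration is declared once, centrally, and the controller on each host is responsible for turning it into local OVS state.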

Key Considerations Before Implementing OVS and OVN

The ecosystem of network virtualization solutions will likely present enticing promises of simplified operations and infinite scalability. However, beyond the surface-level offerings, understanding when and how to implement OVS and OVN requires careful consideration of your specific needs.

Network Scale and Complexity Requirements

The primary consideration in assessing an OVS/OVN deployment is whether your network complexity truly warrants the implementation overhead. Managing virtual networks used to mean working with complex proprietary solutions, but open-source, standardized approaches like OVS and OVN now give organizations a clear path to software-defined networking.

You would be surprised to know how often organizations implement OVS/OVN when simpler solutions would suffice. The trick is to evaluate your actual requirements: Are you managing hundreds of virtual networks across multiple data centers? Do you need microsegmentation with granular security policies? Is multi-tenancy a core requirement? If you're answering yes to these questions, OVS/OVN becomes compelling.

Performance Optimization Strategies

Some practitioners may not realize that OVS performance tuning is essential for production deployments. A properly sized datapath flow cache can dramatically affect packet forwarding performance. The default configurations are tuned for compatibility across diverse deployments rather than for high-throughput environments.

The split between kernel and userspace processing needs careful consideration. When packets match existing flows in the kernel datapath, you get near line-rate performance. However, when packets need to be processed in userspace, performance can drop significantly. Your deployment should minimize userspace processing by ensuring common traffic patterns have corresponding kernel flows.
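One way to gauge how much traffic stays in the kernel datapath is to compare flow cache hits with misses. The sketch below parses the `lookups:` counters line in the form printed by `ovs-dpctl show`. The sample text and its counter values are made up for illustration, and the output layout may differ between OVS versions, so treat the regular expression as an assumption to verify on your own hosts.

```python
import re

# Assumed layout of the counters line, e.g. "lookups: hit:9900 missed:100 lost:0".
LOOKUPS = re.compile(r"lookups:\s*hit:(\d+)\s+missed:(\d+)\s+lost:(\d+)")


def datapath_stats(dpctl_output):
    """Extract hit/miss counters and the kernel hit ratio from dpctl text."""
    match = LOOKUPS.search(dpctl_output)
    if match is None:
        raise ValueError("no 'lookups:' line found in output")
    hit, missed, lost = (int(value) for value in match.groups())
    total = hit + missed
    # Misses are the packets that had to be handed to userspace.
    ratio = hit / total if total else 0.0
    return {"hit": hit, "missed": missed, "lost": lost, "hit_ratio": ratio}


# Illustrative sample, not captured from a real system.
SAMPLE = """system@ovs-system:
  lookups: hit:9900 missed:100 lost:0
  flows: 12
"""

if __name__ == "__main__":
    stats = datapath_stats(SAMPLE)
    print(f"kernel hit ratio: {stats['hit_ratio']:.2%}")
```

A ratio that falls over time, or a non-zero `lost` counter, suggests that more packets are being sent to userspace than the host can comfortably handle and is worth investigating before tuning anything else.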

A key characteristic of OVN deployments is the distributed nature of the control plane. For context, each hypervisor runs its own OVN controller that receives updates from the central databases. Does your infrastructure have sufficient CPU resources on each hypervisor to handle this processing? If not, you might experience degraded network performance during configuration changes or high churn periods.

Integration with Existing Infrastructure

Many teams still manage network configurations through traditional methods due to familiarity, tooling investments, or organizational inertia. One of the must-have features when deploying OVS/OVN is seamless integration with your existing physical network infrastructure. If you are evaluating different deployment models, make sure to test how OVN handles interaction with your physical switches, especially around VLAN tagging and link aggregation.

Manual configuration of network policies becomes untenable as your virtual infrastructure grows. Advanced OVS/OVN deployments provide centralized policy management through the northbound API, but the real value comes from integration with your orchestration platform. Whether you're using OpenStack, Kubernetes, or another platform, ensuring tight integration eliminates configuration drift and enables true infrastructure as code.
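Configuration drift can be reasoned about as the difference between a declared state and an observed one. The following is a minimal sketch of that comparison, assuming both states are represented as dictionaries that map a logical port name to its address string. The function name `plan_changes` and the example data are hypothetical; in a real deployment the orchestration platform would normally perform this reconciliation for you.

```python
def plan_changes(desired, actual):
    """Compare desired and observed port addresses and return the differences.

    Both arguments map a logical port name to its "MAC IP" address string.
    """
    to_add = {port: addr for port, addr in desired.items() if port not in actual}
    to_update = {
        port: addr
        for port, addr in desired.items()
        if port in actual and actual[port] != addr
    }
    to_remove = sorted(port for port in actual if port not in desired)
    return {"add": to_add, "update": to_update, "remove": to_remove}


if __name__ == "__main__":
    # Example data is illustrative only.
    desired = {
        "web-1": "00:00:00:00:00:01 10.0.0.11",
        "web-2": "00:00:00:00:00:02 10.0.0.12",
    }
    actual = {
        "web-1": "00:00:00:00:00:01 10.0.0.99",  # drifted address
        "old-vm": "00:00:00:00:00:09 10.0.0.50",  # no longer declared
    }
    print(plan_changes(desired, actual))
```

Keeping the declared state in version control and applying only the computed differences is what makes the infrastructure-as-code workflow repeatable.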

For more accurate network management beyond basic connectivity, consider features that enable sophisticated traffic engineering:

- Quality of Service (QoS) policies that ensure critical workloads get necessary bandwidth

- Connection tracking for stateful firewall rules without external appliances

- Load balancing capabilities that eliminate the need for separate load balancer instances

- Network function virtualization (NFV) support for running virtual network appliances
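As an illustration of the load balancing item above, this sketch builds the `ovn-nbctl` commands that define a load balancer and attach it to a logical switch. The helper name `load_balancer_commands`, the names `lb-web` and `sw0`, and all addresses are assumptions chosen for the example. The commands are only assembled and printed, not executed.

```python
import shlex

SUPPORTED_PROTOCOLS = ("tcp", "udp", "sctp")


def load_balancer_commands(name, vip, backends, switch, protocol="tcp"):
    """Build ovn-nbctl argument lists for a load balancer on one logical switch."""
    if protocol not in SUPPORTED_PROTOCOLS:
        raise ValueError(f"unsupported protocol: {protocol}")
    if not backends:
        raise ValueError("at least one backend is required")
    return [
        # Define the VIP and its comma-separated backend list.
        ["ovn-nbctl", "lb-add", name, vip, ",".join(backends), protocol],
        # Attach the load balancer to the logical switch.
        ["ovn-nbctl", "ls-lb-add", switch, name],
    ]


if __name__ == "__main__":
    # Example values are hypothetical.
    commands = load_balancer_commands(
        "lb-web", "10.0.0.100:80", ["10.0.0.11:80", "10.0.0.12:80"], "sw0"
    )
    for argv in commands:
        print(shlex.join(argv))
```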

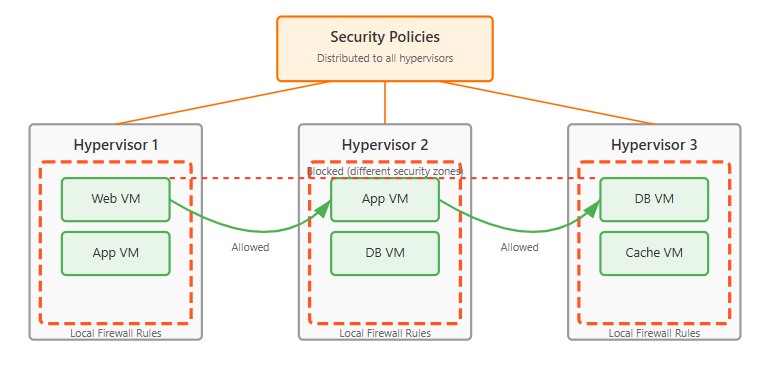

Distributed Security Architecture

Traditional network security relies on perimeter defenses and centralized firewalls. OVN enables a fundamentally different approach where security policies are distributed and enforced at each hypervisor. Your implementation should tie each virtual port to specific security policies, implementing microsegmentation without traffic hairpinning through central appliances.

In some cases, organizations struggle to map their existing security models to OVN's distributed approach. However, the investment in rethinking security architecture pays dividends through reduced attack surfaces and eliminated bottlenecks. Advanced implementations provide policy groups that span multiple networks, essentially helping you to maintain consistent security postures across diverse workloads.
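A policy group of this kind can be sketched with OVN port groups and ACLs. The example below assembles `ovn-nbctl` commands that place several logical ports in one port group, allow inbound TCP traffic to a short list of destination ports, and drop other IPv4 traffic to the group. The helper name `microsegmentation_commands`, the group and port names, and the priorities 1001 and 1000 are illustrative assumptions, and the match expressions should be checked against your OVN version before use.

```python
import shlex


def microsegmentation_commands(group, ports, allowed_tcp_ports):
    """Build ovn-nbctl argument lists for a port group with allow-list ACLs."""
    # One port group can contain logical ports from different logical switches.
    commands = [["ovn-nbctl", "pg-add", group, *ports]]
    for tcp_port in allowed_tcp_ports:
        match = f"outport == @{group} && ip4 && tcp.dst == {tcp_port}"
        commands.append([
            "ovn-nbctl", "--type=port-group", "acl-add", group,
            "to-lport", "1001", match, "allow-related",
        ])
    # Lower-priority rule: drop any other IPv4 traffic destined to the group.
    commands.append([
        "ovn-nbctl", "--type=port-group", "acl-add", group,
        "to-lport", "1000", f"outport == @{group} && ip4", "drop",
    ])
    return commands


if __name__ == "__main__":
    # Example names and ports are hypothetical.
    for argv in microsegmentation_commands("pg_web", ["web-1", "web-2"], [80, 443]):
        print(shlex.join(argv))
```

Because the ACLs are attached to the group rather than to a single switch, adding a workload to the policy only requires adding its port to the group.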

Multi-site and Hybrid Cloud Considerations

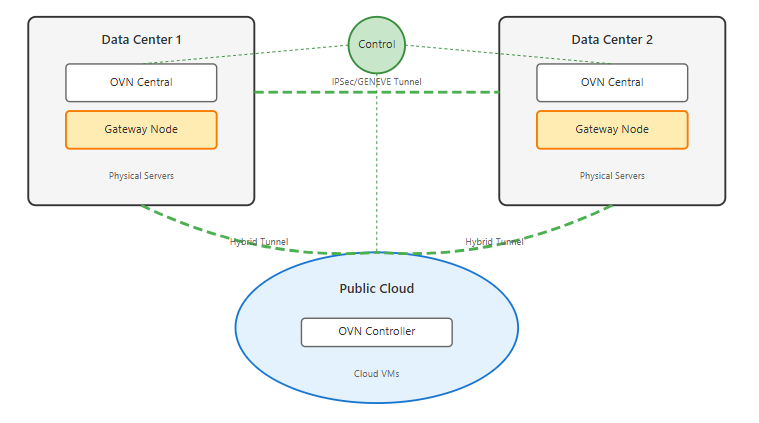

Traditional virtualization platforms struggle with the multi-site deployments that most organizations now require. It is not enough for your solution to work well within a single data center; it must also handle stretched networks across sites and hybrid cloud scenarios.

The differences matter because multi-site networks require handling on an entirely different scale, like optimizing inter-site traffic, maintaining consistency during network partitions, and providing location-aware services. On the surface, connecting two OVN deployments might seem straightforward. However, in practice, considerations around gateway placement, tunnel overhead, and failure scenarios require careful planning.
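Tunnel overhead is one of the easier items to quantify. The sketch below estimates the largest inner IP packet that fits in a Geneve tunnel for a given underlay MTU. The header sizes for IPv4, IPv6, UDP, the base Geneve header, and inner Ethernet are standard. The 8 bytes of Geneve options is an assumption about OVN's default encapsulation and should be confirmed for your version; the function name `inner_mtu` is hypothetical.

```python
OUTER_IPV4 = 20      # bytes, outer IPv4 header without options
OUTER_IPV6 = 40      # bytes, outer IPv6 fixed header
UDP = 8              # bytes, outer UDP header
GENEVE_BASE = 8      # bytes, fixed Geneve header
INNER_ETHERNET = 14  # bytes, encapsulated Ethernet header (no VLAN tag)


def inner_mtu(underlay_mtu, ipv6_underlay=False, geneve_options=8):
    """Estimate the inner IP MTU available to workloads over a Geneve tunnel.

    `geneve_options` defaults to 8 bytes, an assumption about OVN's metadata.
    """
    outer_ip = OUTER_IPV6 if ipv6_underlay else OUTER_IPV4
    overhead = outer_ip + UDP + GENEVE_BASE + geneve_options + INNER_ETHERNET
    if underlay_mtu <= overhead:
        raise ValueError("underlay MTU is smaller than the tunnel overhead")
    return underlay_mtu - overhead


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(mtu, inner_mtu(mtu), inner_mtu(mtu, ipv6_underlay=True))
```

Under these assumptions a 1500-byte IPv4 underlay leaves 1442 bytes for workload packets, which is why inter-site links with a standard MTU often need either a reduced guest MTU or jumbo frames on the underlay.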

You absolutely need a deployment approach that treats both on-premises and cloud-based resources under a unified network model. When designing your architecture, don't just look for basic connectivity between sites. Think bigger. The ideal deployment should provide intelligent traffic routing that keeps local traffic local, seamless workload mobility between sites, and consistent policy enforcement regardless of workload location.

Conclusion

The long-term success of an OVS/OVN deployment often comes down to operational excellence and team expertise. While the technical capabilities of these technologies are impressive, the quality of your implementation, your team's understanding of software-defined networking concepts, and your commitment to automation can be the genuine differentiators that lead to operational efficiency and infrastructure agility over time.

When planning your deployment, focus on the top must-have capabilities for your environment. These might be distributed load balancing for container workloads, sophisticated traffic engineering for multi-tenant environments, or seamless integration with your existing network automation tools.

Remember that OVS and OVN represent a fundamental shift in how we think about networking. Rather than managing individual network devices, you're now managing a logical network abstraction that can adapt dynamically to your workload requirements. This paradigm shift requires investment in skills and processes, but the payoff in terms of agility and operational efficiency makes it worthwhile for organizations operating at scale.